ClawBands: A Security Middleware That Puts Human-in-the-Loop Controls on OpenClaw AI Agents

A lightweight TypeScript plugin that hooks into OpenClaw's tool execution pipeline, enforcing approval workflows before your AI agent can write files, run shell commands, or make network requests. Think sudo for your AI agent.


OpenClaw has exploded in popularity as a personal AI assistant platform, with over 150,000 GitHub stars and coverage from Fortune, IBM, and VentureBeat. But as Fortune recently highlighted, giving an AI agent the ability to execute shell commands, modify files, and access your APIs creates real security risks: data exfiltration, unintended command execution, and prompt injection attacks.

ClawBands is a new open-source project by SeyZ that addresses this problem directly. It's a security middleware that hooks into OpenClaw's before_tool_call plugin event, intercepting every tool execution and enforcing human approval before dangerous actions run. The agent pauses and waits for your decision before proceeding.

// The Problem It Solves

OS-level isolation (containers, VMs) protects your host machine from a rogue AI agent. But it doesn't protect the services your agent already has access to: your GitHub repos, your APIs, your file system, your smart home devices. If an agent gets hijacked via prompt injection or simply makes a bad decision, it can do real damage within its authorized scope.

ClawBands sits between the agent's intent and the actual execution. Every tool call passes through a policy engine that decides whether to allow it immediately, block it outright, or pause and ask a human. Nothing executes without passing through this gate.
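The three-way gate described above can be sketched in a few lines. This is a minimal illustration, not ClawBands' actual source: the names `gate`, `decide`, `askHuman`, and `execute` are hypothetical, and the human prompt is modeled as a synchronous callback for brevity, whereas a real approval would wait on user input.

```typescript
// Hypothetical names throughout; this is not ClawBands' actual source.
type Decision = "ALLOW" | "ASK" | "DENY";

interface ToolCall {
  tool: string;
  args: Record<string, unknown>;
}

type GateResult =
  | { executed: true; output: string }
  | { executed: false; reason: string };

// Runs `execute` only when policy allows it, or when policy says ASK
// and the human approves. DENY never reaches the human at all.
function gate(
  call: ToolCall,
  decide: (call: ToolCall) => Decision,
  askHuman: (call: ToolCall) => boolean,
  execute: (call: ToolCall) => string,
): GateResult {
  const decision = decide(call);
  if (decision === "DENY") {
    return { executed: false, reason: "blocked by policy" };
  }
  if (decision === "ASK" && !askHuman(call)) {
    return { executed: false, reason: "rejected by human" };
  }
  return { executed: true, output: execute(call) };
}
```

The point of the shape is that `execute` is reachable only through `gate`, so there is a single place where a call can be stopped.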

// How It Works

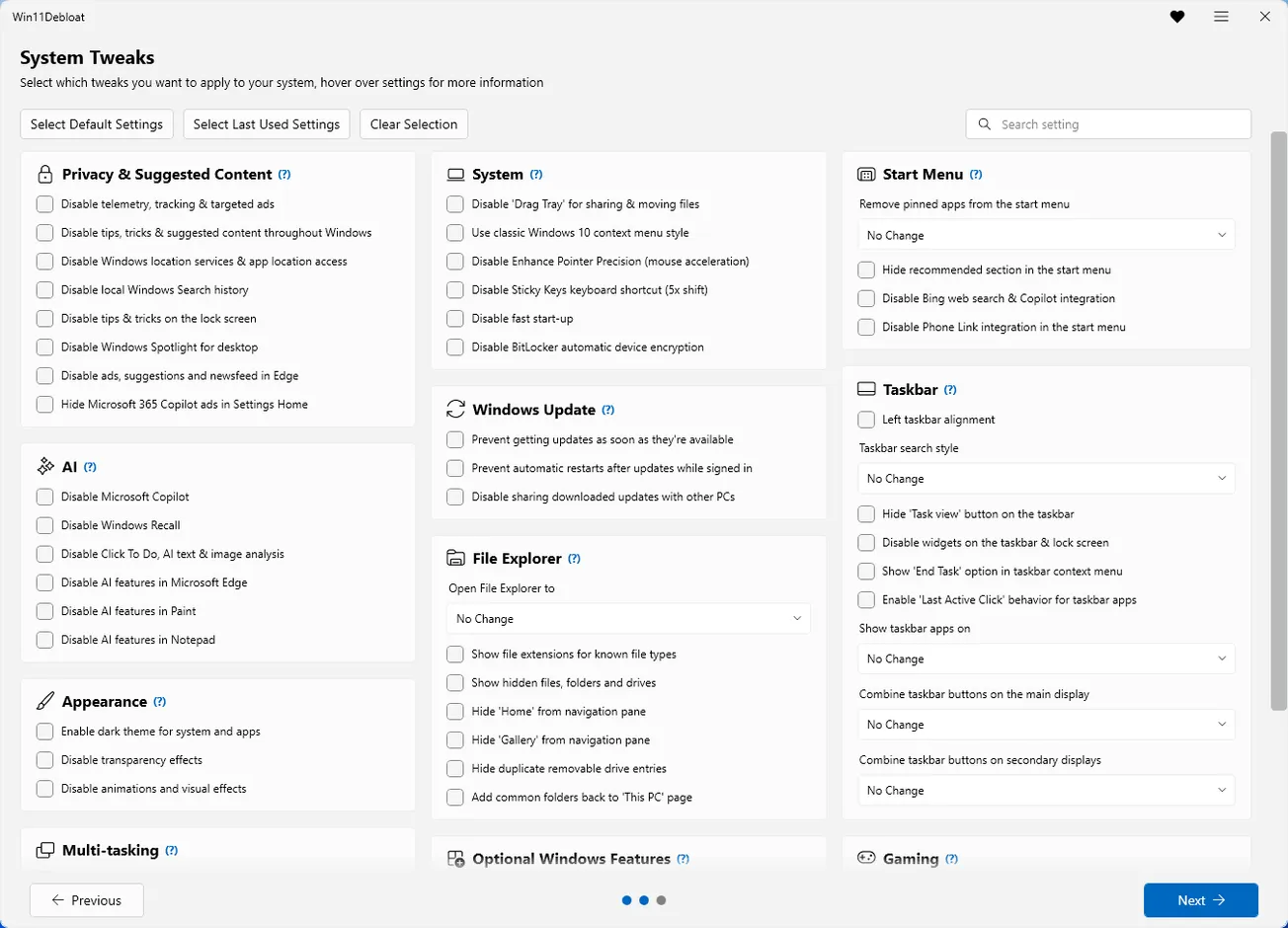

The plugin registers with OpenClaw's event system and intercepts every tool call before execution. It maps each tool to a module (FileSystem, Shell, Network, Browser, Gateway) and applies a configurable security policy. In terminal mode, you get an interactive prompt. On messaging channels (WhatsApp, Telegram), the agent sends you a YES/NO question and waits for your response via a dedicated clawbands_respond tool.
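To make the interception step concrete, here is a rough sketch of a hook that runs before each tool call. Only the event name `before_tool_call` comes from the project description; the `FakeHost` class, the event payload, and the `block` result shape are invented stand-ins so the example runs on its own, and OpenClaw's real plugin interface may look quite different.

```typescript
// ASSUMPTION: only the event name `before_tool_call` comes from the article.
// The host class, payload shape, and `block` result are invented stand-ins,
// not OpenClaw's real plugin interface.
interface ToolCallEvent {
  toolName: string;
  params: Record<string, unknown>;
}

interface HookResult {
  block: boolean;
  reason?: string;
}

type BeforeToolCall = (event: ToolCallEvent) => HookResult;

// Minimal in-memory host so the sketch runs without OpenClaw installed.
class FakeHost {
  private hooks: BeforeToolCall[] = [];

  on(eventName: string, hook: BeforeToolCall): void {
    if (eventName === "before_tool_call") this.hooks.push(hook);
  }

  // The tool body runs only if no registered hook blocks the call.
  callTool(toolName: string, params: Record<string, unknown>, body: () => string): string {
    for (const hook of this.hooks) {
      const result = hook({ toolName, params });
      if (result.block) return `blocked: ${result.reason ?? "no reason given"}`;
    }
    return body();
  }
}

// Registers a guard; `approve` stands in for the policy check plus prompt.
function installGuard(host: FakeHost, approve: (event: ToolCallEvent) => boolean): void {
  host.on("before_tool_call", (event) =>
    approve(event)
      ? { block: false }
      : { block: true, reason: `${event.toolName} was not approved` },
  );
}
```

With a guard installed via `installGuard(host, (e) => e.toolName === "read")`, a `read` call reaches its body while any other tool call returns a `blocked:` message instead of running.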

// Key Features

// Security Policies

| Policy | Behavior |
|---|---|
| ALLOW | Execute immediately with no prompt (e.g., file reads, glob) |
| ASK | Pause agent and prompt for human approval (e.g., file writes, shell commands) |
| DENY | Block automatically with no option to override (e.g., file deletes) |

The default "Balanced" policy allows file reads, asks on writes and shell commands, and denies file deletes. Network requests (fetch, download, webhook) default to ASK. Any tool without an explicit mapping also defaults to ASK, a sensible fail-secure choice: an unrecognized tool triggers a prompt instead of running unchecked.
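As a rough illustration of that default, the sketch below encodes the Balanced rules as a lookup table with ASK as the fallback. The `Module.action` key format, the `balancedPolicy` constant, and the `decide` function are assumptions made for this example; ClawBands' real configuration format may differ.

```typescript
type Decision = "ALLOW" | "ASK" | "DENY";

// Keys use a hypothetical "Module.action" naming chosen for this sketch;
// the entries mirror the Balanced defaults described in the text.
const balancedPolicy: Record<string, Decision> = {
  "FileSystem.read": "ALLOW",
  "FileSystem.glob": "ALLOW",
  "FileSystem.write": "ASK",
  "FileSystem.delete": "DENY",
  "Shell.bash": "ASK",
  "Network.fetch": "ASK",
  "Network.download": "ASK",
  "Network.webhook": "ASK",
};

function decide(action: string, policy: Record<string, Decision> = balancedPolicy): Decision {
  // Own-property check so names like "constructor" cannot match inherited keys.
  const found = Object.prototype.hasOwnProperty.call(policy, action)
    ? policy[action]
    : undefined;
  // Fail secure: anything unmapped falls back to ASK, never ALLOW.
  return found ?? "ASK";
}
```

Defaulting to ASK instead of ALLOW means a newly added or misspelled tool name costs you a prompt, not an unreviewed action.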

// Protected Tool Categories

| Module | Tools Intercepted |
|---|---|
| FileSystem | read, write, edit, glob |
| Shell | bash, exec |
| Browser | navigate, screenshot, click, type, evaluate |
| Network | fetch, request, webhook, download |
| Gateway | listSessions, listNodes, sendMessage |
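One way to picture the tool-to-module mapping is as an inverted index built from the table above. The sketch below is illustrative only: `toolsByModule`, `buildToolIndex`, and `moduleFor` are hypothetical names, and it assumes tools arrive under the flat names shown in the table.

```typescript
type Module = "FileSystem" | "Shell" | "Browser" | "Network" | "Gateway";

// Contents copied from the table above; flat tool names are an assumption.
const toolsByModule: Record<Module, readonly string[]> = {
  FileSystem: ["read", "write", "edit", "glob"],
  Shell: ["bash", "exec"],
  Browser: ["navigate", "screenshot", "click", "type", "evaluate"],
  Network: ["fetch", "request", "webhook", "download"],
  Gateway: ["listSessions", "listNodes", "sendMessage"],
};

// Inverts the table into a tool -> module lookup.
function buildToolIndex(table: Record<Module, readonly string[]>): Record<string, Module> {
  // Null-prototype object so inherited keys such as "toString" never match.
  const index: Record<string, Module> = Object.create(null);
  for (const mod of Object.keys(table) as Module[]) {
    for (const tool of table[mod]) {
      index[tool] = mod;
    }
  }
  return index;
}

const toolIndex = buildToolIndex(toolsByModule);

// Returns undefined for tools that belong to no known module.
function moduleFor(tool: string): Module | undefined {
  return toolIndex[tool];
}
```

A caller would treat an `undefined` result as "unmapped" and hand it to whatever fallback policy applies.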

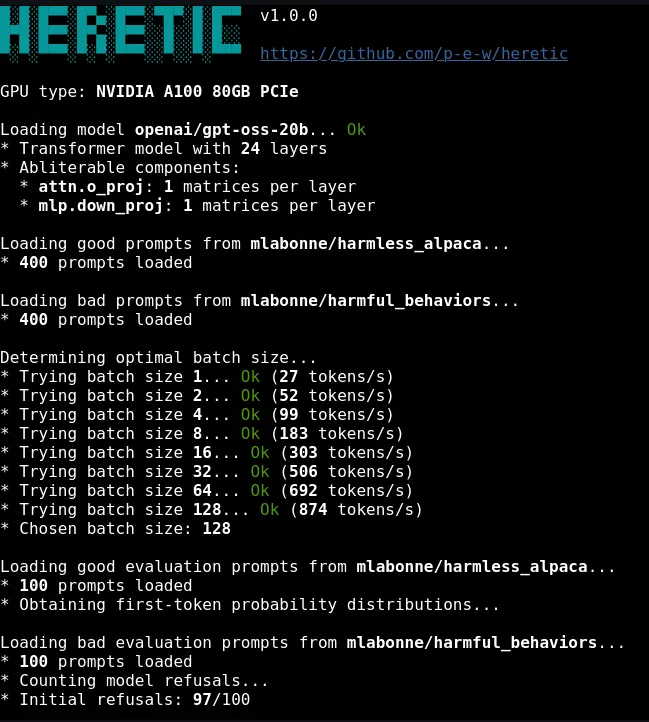

// Architecture

// Why This Matters Right Now

The core insight behind ClawBands is that container-level isolation isn't enough for AI agents. An agent running inside a Docker container is still dangerous if it has API keys, database credentials, or access to messaging platforms. The threat model isn't "agent escapes the sandbox" but rather "agent does something harmful within its authorized scope."

By intercepting at the tool-call level rather than the OS level, ClawBands provides defense-in-depth that complements existing containerization. The agent can still do everything it's supposed to do, but every sensitive action requires explicit human approval.

// Considerations

OpenClaw-specific. ClawBands is built exclusively for OpenClaw's plugin system. It hooks into the before_tool_call event and the api.registerTool() API. It won't work with other AI agent frameworks without modification.

Human bottleneck. The synchronous blocking model means the agent stops entirely when it hits an ASK policy. If you're running an agent that needs to execute dozens of write operations, you'll be approving each one individually. This is by design (security over convenience), but it's worth understanding the workflow implications.

Channel mode trust model. In WhatsApp/Telegram mode, the agent relays your approval decision via the clawbands_respond tool. The approval flow therefore passes through the agent itself, the same component the approval is meant to constrain, so it is worth deciding how much you trust that path before relying on it.
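The channel-mode flow can be pictured as a small queue of pending approvals that a respond call settles. This is a simplified sketch under stated assumptions: `ApprovalQueue` and its methods are hypothetical, and only the tool name `clawbands_respond` comes from the project description.

```typescript
type Answer = "YES" | "NO";
type Status = "pending" | "approved" | "rejected";

interface PendingRequest {
  question: string;
  status: Status;
}

// Hypothetical bookkeeping for approvals that are waiting on a reply.
class ApprovalQueue {
  private requests: Record<string, PendingRequest> = {};
  private counter = 0;

  // Called when a tool call hits an ASK policy; returns a request id.
  open(question: string): string {
    this.counter += 1;
    const id = `req-${this.counter}`;
    this.requests[id] = { question, status: "pending" };
    return id;
  }

  // Stand-in for the handler behind the `clawbands_respond` tool.
  // Each request can be settled once; later answers are ignored.
  respond(id: string, answer: Answer): boolean {
    const request = this.requests[id];
    if (request === undefined || request.status !== "pending") return false;
    request.status = answer === "YES" ? "approved" : "rejected";
    return true;
  }

  statusOf(id: string): Status | undefined {
    return this.requests[id]?.status;
  }
}
```

In this sketch, `respond` cannot tell whether a YES originated from the human or from the caller relaying it, which is the trust question the paragraph above raises.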

// Bottom Line

ClawBands fills a clear gap in the OpenClaw ecosystem. As AI agents get more capable and more people deploy them with access to real infrastructure, the question of "what happens when the agent does something you didn't intend" becomes urgent. ClawBands offers a straightforward answer: nothing sensitive happens without your explicit permission.

It's early days for this project, but the approach is sound. A lightweight, in-process middleware that enforces human-in-the-loop approval with granular policies and a full audit trail is exactly the kind of tooling the AI agent ecosystem needs as it matures. If you're running OpenClaw in any environment where the agent has access to sensitive resources, this is worth installing.